As

Zeke says, it seems to be a custom now for bloggers to post codes to grid GHCN data and calculate trends. Here's my offering. It's influenced by Jeff Id's

program, and particularly by Romanm's

Least Squares. In fact, it's an extension of Roman's offset method to the whole global set.

It's fairly different to the conventional approach. It doesn't actually do gridding, or (per Roman) calculate anomalies. It's probably the shortest of the codes, and runs quite fast.

A summary of this series of posts is here.

The linear model

I'll attach a pdf file explaining the mathematics in more detail (soon). But here's a sketch.

The monthly data, from v2.mean after averaging over duplicates, is in a big array x(S,m,y). The indices are station, month and year.

The model is that x can be represented in terms of a global temperature G(y) depending on year alone (not month or station) plus a local offset L(S,m) for each station S, with a seasonal component dependent on month m (but not year), plus error:

x(S,m,y) = G(y) + L(S,m) + eps(S,m,y)

I do a least squares weighted fit.

The weight function w(S,m,y) is inversely proportional to area density of stations, and of course, zero for (S,m,y) combinations where there is no data. So we're minimising (Latex-like notation)

Sum_{S,m,y} w(S,m,y)*(x(S,m,y) - G(y) - L(S,m))^2

Weighting

The weight function uses a grid. But it just counts stations in each cell - no explicit grid averaging is done. The count is for each month (and year). The cell area is divded by this count to give wt(S,m,y).

A conventional 5 deg x 5 lat/lon cell is used.

The design matrix system

Minimising LS gives the system

L(S,m)*Sum_{y} w(S,m,y) + Sum_{y} {w(S,m,y)*G(y)}

= Sum_{y} {w(S,m,y)*x(S,m,y)}

Sum_{S,m} {w(S,m,y)*L(S,m)} + G(y)*Sum_{S,m} w(S,m,y)

= Sum_{S,m} {w(S,m,y)*x(S,m,y)}

Regarding (S,m) as a single index, this is actually a symmetric system:

A1*L +B*G = R1

t(B)*L +A2*G = R2

A1 is the very big block, but is a diagonal matrix. t(B) is the transpose of B. A2 is diagonal too.

Solving

This can no doubt be solved (for local L and global temp G) using R's lm() type functions. Numerically, it is essential to take advantage of A1 being diagonal. I don't know how R can do that, and would appreciate advice.

I constructed a home-made block solver which was quite fast and apparently effective (although I have an idea for a faster version).

A wrinkle is that the matrix is singular, because you coould add any constant to the global temp G and subtract it from L. So I added an equation to fix the initial G to zero.

Testing

To test the code, I created a synthetic data file with the v2,mean format, but made up of just a trend with a sinusoidal temperature (same for all stations), with a random scattering of missing data. It should give an exact answer, and it did. The test code is included

here.

Results

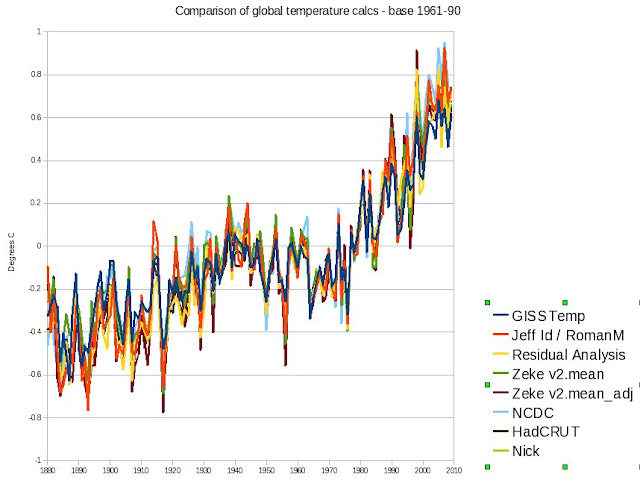

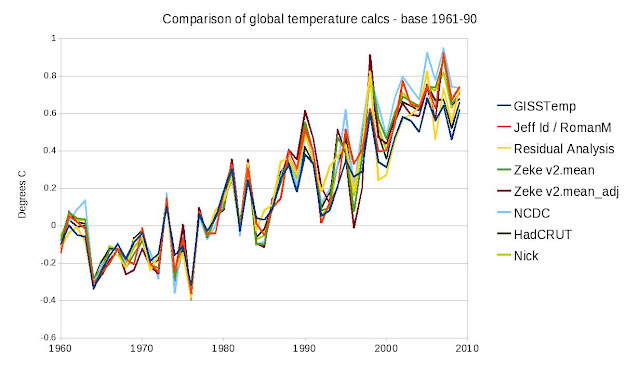

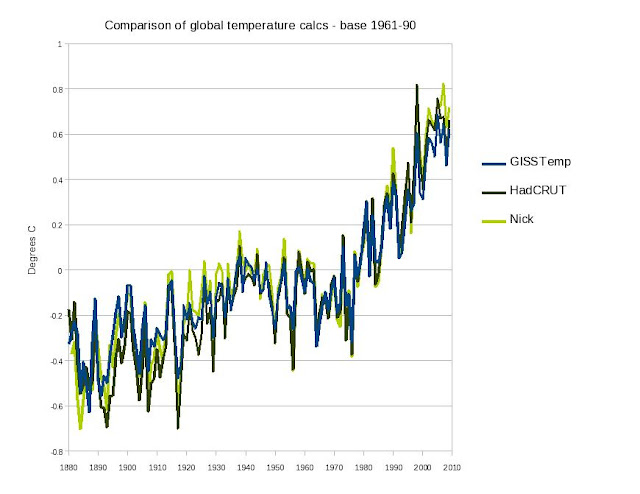

I haven't tried hemispheres, yet, just global. I'll show in a following post comparisons with HADCRUT etc. For the moment, I just looked at global temps and trends from 1900 to present, and 1978 to present. I was particularly interested to compare with

Jeff's value of 0.2476 C/dec. Here's my fig, with the result 0.2542 C.dec. (The AR1 correction is coming).

For 1900-2009, my slope is 0.0747 C/dec - well within

Zeke's range.

Code and next steps

The code, and the test code, is in the

repository.

In a future post, I'll show results for the local seasonal temperatures from the LS fit - they are pretty interesting too.